Keep Generative AI Spend in Check With LLM Cost Guard

Monitoring costs tied to large language models (LLMs) becomes critical as Generative AI projects become more complex. LLM Cost Guard enables effective tracing and monitoring of LLM usage, including ready-to-use dashboards to better anticipate Generative AI costs.

Get Visual, Fine-Grained LLM Cost Monitoring

As organizations embark on multiplying Generative AI use cases, they need to be thinking as much about costs as they are about the quality and performance of the models. Enter: LLM Cost Guard.

IT teams can analyze up-to-the-minute data on usage across the LLM lifecycle with LLM Cost Guard, broken down by use case, user, or Dataiku project. A fully auditable log of who is using which LLM and service for what purpose allows for cost tracking and internal re-billing. It also supports better decisions on how many LLMs to use, procurement negotiations, and LLM access management.
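To make the re-billing idea concrete, here is a minimal Python sketch of rolling up usage records by project or user. It is an illustration only: the record fields (`project`, `user`, `model`, `cost_usd`), the sample values, and the `cost_by` helper are assumptions made for this example, not the actual LLM Cost Guard log schema or API.

```python
from collections import defaultdict

# Hypothetical usage records. Field names and values are illustrative
# assumptions, not the real LLM Cost Guard audit-log schema.
USAGE_LOG = [
    {"project": "CHURN", "user": "alice", "model": "model-a", "cost_usd": 0.50},
    {"project": "CHURN", "user": "bob", "model": "model-b", "cost_usd": 0.25},
    {"project": "SUPPORT", "user": "alice", "model": "model-a", "cost_usd": 1.25},
    {"project": "SUPPORT", "user": "bob", "model": "model-a", "cost_usd": 2.00},
]


def cost_by(records, key):
    """Sum cost_usd over the records, grouped by the given field name."""
    totals = defaultdict(float)
    for record in records:
        totals[record[key]] += record["cost_usd"]
    return dict(totals)


if __name__ == "__main__":
    print("Cost per project:", cost_by(USAGE_LOG, "project"))
    print("Cost per user:", cost_by(USAGE_LOG, "user"))
```

The same grouping works for any field present in the log, so a re-billing report per team or per model would follow the same pattern.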

Compare Across Providers & Models

Organizations are increasingly finding that they cannot rely on a single LLM for all Generative AI-powered applications. Rather, different use cases and tasks require different models. And with costs and performance between models varying considerably, the need for flexibility across providers is even more salient.

LLM Cost Guard allows for robust LLM monitoring regardless of models, methods, or technical approaches. Get a global view of costs so you can anticipate and manage the financial impact of every use case across the enterprise.

Include Cost in Your LLMOps Strategy

Deliver LLM and Generative AI solutions safely and securely in an enterprise setting with the right level of oversight.

LLM Cost Guard is part of the larger Dataiku LLM Guard Services, which provide wider LLM monitoring tools beyond cost. With LLM Guard Services, teams can ensure continuous improvement and a feedback loop by:

- Monitoring resource usage

- Detecting and handling personally identifiable information (PII)

- Detecting toxicity

- Preventing forbidden terms

Bonus: Cache Responses for Cost Savings

Cost monitoring is important, but saving costs wherever possible is also crucial for teams leveraging LLMs.

The Dataiku LLM Mesh provides the option to cache responses to common queries. That means no need to regenerate responses, offering both cost savings and a performance boost. In addition, if self-hosting, you have the power to cache local Hugging Face models to reduce the cost to your infrastructure. All resulting savings are made available in the LLM Cost Guard, allowing you to refine and reinforce your caching strategies.
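For readers who want to see the general idea behind response caching, the sketch below shows one simple way it can work: identical requests to the same model are answered from memory instead of triggering a new LLM call. This is a generic illustration, not how the LLM Mesh implements caching; the `ResponseCache` class and the `call_llm` callable are hypothetical names introduced for this example.

```python
import hashlib


class ResponseCache:
    """In-memory cache for LLM responses, keyed by model and exact prompt text.

    Hypothetical helper for illustration; not part of any Dataiku API.
    """

    def __init__(self):
        self._store = {}
        self.hits = 0    # requests served from the cache (no LLM call made)
        self.misses = 0  # requests that required a real LLM call

    @staticmethod
    def _key(model, prompt):
        # Hash model and prompt together so the same prompt sent to a
        # different model is cached separately.
        return hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model, prompt, call_llm):
        """Return a cached response, or call call_llm(model, prompt) and cache it."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call_llm(model, prompt)
        self._store[key] = response
        return response
```

Each cache hit is a call that was not billed, so comparing `hits` with `misses` gives a rough view of how much a caching strategy is saving.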

Leverage the Power of the LLM Mesh

LLM Guard Services, including LLM Cost Guard, are extensions of the Dataiku LLM Mesh. The LLM Mesh is changing how data and IT teams use Generative AI models and services for real-world applications, making that use scalable and safe. Dataiku designed the LLM Mesh to:

- Decouple the application and service layers. With an unparalleled breadth of connectivity to today’s most in-demand LLM providers, teams can design the best possible Generative AI applications and maintain them easily in production.

- Enforce a secure gateway. The LLM Mesh acts as a secure API gateway to break down hard-coded dependencies and manage and route requests between applications and underlying services.

…and much more. For example, the LLM Mesh provides integration with vector databases. Users can easily implement a full retrieval augmented generation (RAG) use case with no code needed.

Set Up in Minutes, Not Weeks or Months

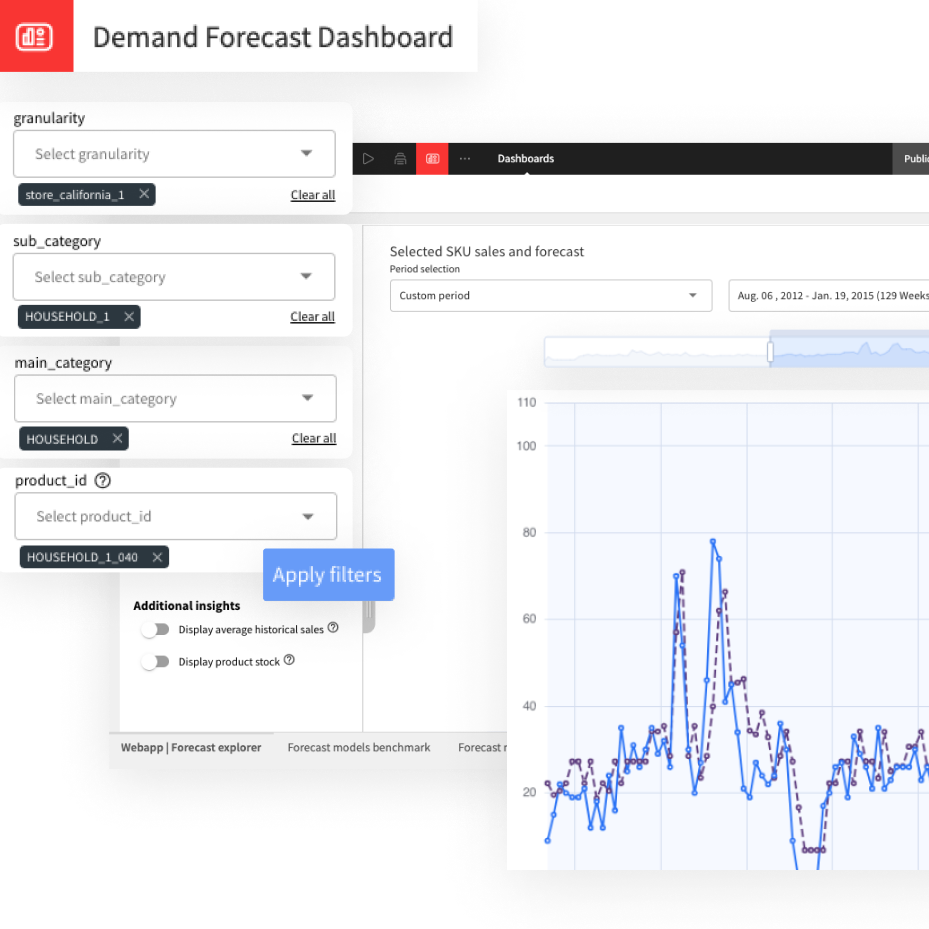

Easily connect to your logs, whatever the underlying infrastructure and LLM strategy, without writing a single line of code. LLM Cost Guard comes out of the box with ready-to-use dashboards for immediate value on LLM cost monitoring.

Make It Your Own

LLM Cost Guard is ready for use as-is, but you can also customize it to meet your specific needs. For example, leverage Dataiku Scenarios to set up alerts around usage and cost. Alerts allow for a more proactive approach as well as empower users to make smarter decisions about how they use LLMs.
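As a rough illustration of the logic behind a cost alert, the Python sketch below compares spend per project against a budget and returns a message for each project that is over. It is an assumption-laden example: the `over_budget` function, the budget figures, and the message format are hypothetical and do not represent the Dataiku Scenarios API.

```python
def over_budget(spend_by_project, budgets, default_budget=None):
    """Return one alert message per project whose spend exceeds its budget.

    Hypothetical helper for illustration only. Projects without a budget
    fall back to default_budget and are skipped when that is None.
    """
    alerts = []
    for project, spend in sorted(spend_by_project.items()):
        budget = budgets.get(project, default_budget)
        if budget is not None and spend > budget:
            alerts.append(f"{project}: spent ${spend:.2f} of ${budget:.2f} budget")
    return alerts


if __name__ == "__main__":
    # Illustrative figures, not real usage data.
    spend = {"CHURN": 120.0, "SUPPORT": 40.0}
    budgets = {"CHURN": 100.0, "SUPPORT": 50.0}
    for message in over_budget(spend, budgets):
        print(message)
```

A check like this could run on a schedule and send its messages to whichever channel the team already watches.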

From Analytics to Generative AI

Why use Dataiku for your Generative AI and LLM use cases? Because the most powerful way to leverage Generative AI is in tandem with traditional machine learning (ML) techniques.

Dataiku is the platform for Everyday AI, enabling data experts and domain experts to work together to build data into their daily operations. From data preparation capabilities to machine learning lifecycle monitoring with MLOps, DataOps, model deployment, and more, Dataiku is the one central platform for data work (including Generative AI) across organizations.

The Total Economic Impact™ of Dataiku

A composite organization in the commissioned study conducted by Forrester Consulting on behalf of Dataiku saw the following benefits:

reduction in time spent on data analysis, extraction, and preparation.

reduction in time spent on model lifecycle activities (training, deployment, and monitoring).

return on investment

net present value over three years.